Agentic AI R&D for Enterprise Bespoke Software Development

We're rethinking how enterprise software is engineered — using Agentic AI to orchestrate distributed teams of intelligent agents across the SDLC.

Our current focus is on research, experimentation, and laying the groundwork for enterprise-scale adoption, with a foundation built on the Microsoft technology stack.


Our vision, story and values

At ACortex, we believe software projects can be both innovative and efficient when powered by agentic AI. In this architecture, autonomous agents work towards well-defined goals, collaborating with each other and with human experts to deliver high-impact results.

The outcome is a more agile and versatile team—one that focuses human creativity where it matters most.

Early Exploration

We began by exploring how AI could automate and enhance projects involving development teams of over 100 people, across all roles in a software project: BAs, Devs, QAs, PMs...

R&D Focus

We're actively developing and refining a modular Agentic AI platform. Our current phase is focused on discovery, iterative validation, and testing the limits of multi-agent coordination.

Future-Ready

In a landscape where new developments emerge every day, we invest our time in exploring cutting-edge Agentic AI architectures and models to stay ahead of rapid technological shifts.

Transparency

We don’t make impossible claims. Our approach is grounded in evidence and experience, not speculation.

Collaboration

We pair AI Agents with top-tier human specialists for rigorous validation and creative insight.

Privacy & Security

Privacy is a must. Sensitive data is handled within secured environments and on-premises air-gapped AI stations.

Ongoing projects

We operate across multiple areas of software development to revolutionise execution — reducing repetitive workloads, improving accuracy, enhancing quality, increasing safety, cutting costs, and accelerating delivery. These are early-stage R&D projects — not commercial features or services (yet).

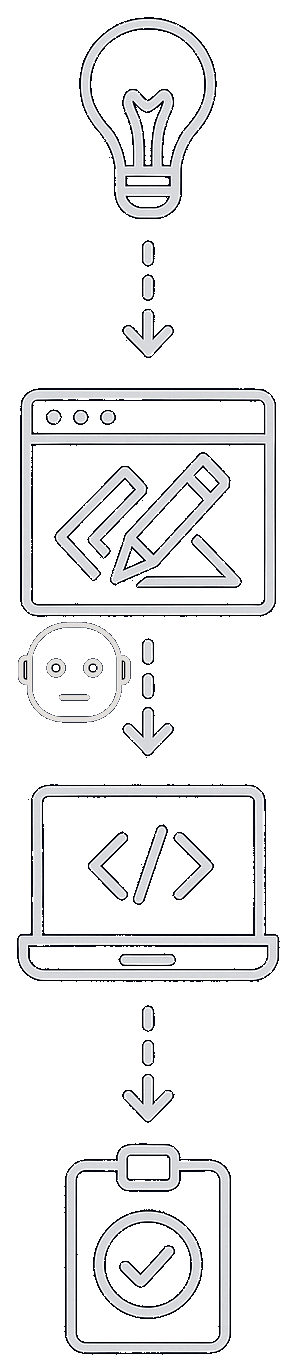

Agentic AI Enterprise Software Engineering

We're designing Agentic development teams to eventually deliver production-ready enterprise software solutions faster, with higher quality, greater control, and lower cost.

- Dedicated roles for requirements analysis, architecture design, coding, testing, security review, user experience, and more.

- Agents collaborate in real time, exchanging structured knowledge graphs to keep every task aligned with the software project goals.

- Senior engineers provide rapid strategic oversight, sign-off, and domain expertise — ensuring clear accountability.

- Run the entire agent stack inside your own data centre for total data sovereignty (ISO 27001 & GDPR aligned).

- Prefer elasticity? Burst securely into the cloud or adopt a hybrid pattern with zero-trust encrypted channels.

- Kubernetes-native micro-services make switching environments friction-free.

- Agents generate clean, peer-reviewed code, infrastructure-as-code manifests, test suites and user documentation.

- Every commit carries an immutable provenance record and explainability trace, meeting even the strictest AI governance standards.

- Integrated CI/CD pipelines ship features in days, not months, with roll-back safety nets and blue-green releases.

- Interactive checkpoints enable stakeholders to accept, refine, or redirect the agents’ output in near real time.

- Visual dashboards surface agent reasoning paths, compliance flags and performance metrics for non-technical reviewers.

- Post-launch, monitoring agents track KPIs, user feedback, and drift, automatically raising improvement tickets.

- We iteratively refine system performance through agent orchestration, prompt design, and configuration tuning — optimising for cost, latency, and energy usage while supporting OPEX and ESG goals.
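To make the "immutable provenance record" idea from the list above more concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not a description of the ACortex platform: the `ProvenanceRecord` fields, the function names, and the use of a SHA-256 hash chain are all hypothetical choices made for this example. Each record stores the hash of the previous one, so editing any earlier record changes every later hash and can be detected against a separately stored head hash.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, replace

GENESIS = "0" * 64  # placeholder "previous hash" for the first record (assumption)


@dataclass(frozen=True)
class ProvenanceRecord:
    """Hypothetical provenance entry attached to one commit."""
    commit_id: str
    agent_role: str
    reviewer: str
    rationale: str   # short explainability note for the change
    prev_hash: str   # digest of the previous record in the chain

    def digest(self) -> str:
        # Serialise with sorted keys so the hash is deterministic.
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()


def append_record(chain, commit_id, agent_role, reviewer, rationale):
    """Return a new chain with one record linked to the current head."""
    prev_hash = chain[-1].digest() if chain else GENESIS
    record = ProvenanceRecord(commit_id, agent_role, reviewer, rationale, prev_hash)
    return chain + [record]


def head_hash(chain):
    """Digest of the latest record; store this somewhere trusted."""
    return chain[-1].digest() if chain else GENESIS


def verify_chain(chain, trusted_head):
    """Check every link, then compare the final digest with the trusted head."""
    expected = GENESIS
    for record in chain:
        if record.prev_hash != expected:
            return False
        expected = record.digest()
    return expected == trusted_head


chain = append_record([], "a1b2c3", "coding-agent", "senior-engineer", "Add login endpoint")
chain = append_record(chain, "d4e5f6", "testing-agent", "senior-engineer", "Add login tests")
trusted = head_hash(chain)

# Simulate someone rewriting the first record after the fact.
tampered = [replace(chain[0], rationale="edited later")] + chain[1:]

print(verify_chain(chain, trusted))     # True
print(verify_chain(tampered, trusted))  # False
```

A hash chain only shows that records were not altered after the head hash was stored; where that head hash lives (for example a signed tag or an external log) is a separate design decision that this sketch leaves open.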

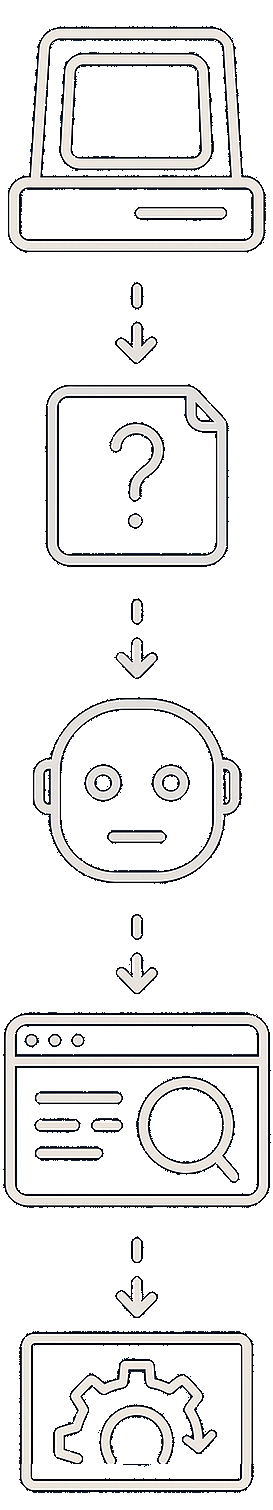

Agentic AI Legacy Systems Reverse-Engineering & Documentation Recovery

- Dedicated roles for reverse engineering, architecture recovery, process modelling, UI/UX interpretation, and documentation generation.

- Agents collaborate in real time, exchanging structured knowledge graphs to align technical details with inferred business intent.

- Senior analysts supervise output, validate edge cases, and ensure domain accuracy across the reconstructed artefacts.

- Run the entire agent stack inside your own data centre for total data sovereignty (ISO 27001 & GDPR aligned).

- Prefer elasticity? Burst securely into the cloud or adopt a hybrid pattern with zero-trust encrypted channels.

- Kubernetes-native micro-services ensure smooth integration into existing IT environments.

- Agents analyse source code, UI flows and legacy artefacts to generate complete, human-readable documentation.

- Output includes architectural diagrams, inferred requirements, data flow documentation, and system interaction overviews.

- Every artefact is versioned, annotated with confidence metadata, and aligned with compliance or audit needs.

- Interactive checkpoints enable teams to review and refine inferred documentation and system interpretations.

- Visual dashboards expose agent reasoning, documentation coverage, and potential knowledge gaps for expert input.

- Post-analysis, agents monitor codebase evolution and update documentation as changes are detected.

- We're exploring human-AI co-review cycles to help keep documentation accurate, relevant, and usable over time.
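As one possible illustration of how confidence metadata could feed the review checkpoints described above, the Python sketch below routes low-confidence artefacts to human experts first. Everything in it is an assumption made for the example: the `Artefact` fields, the 0.0 to 1.0 confidence scale, and the `REVIEW_THRESHOLD` value are hypothetical, not part of any published ACortex interface.

```python
from dataclasses import dataclass

REVIEW_THRESHOLD = 0.8  # hypothetical cut-off below which a human must review


@dataclass(frozen=True)
class Artefact:
    """Hypothetical recovered documentation artefact."""
    name: str
    version: int
    confidence: float     # assumed scale: 0.0 (guess) to 1.0 (certain)
    source_paths: tuple   # code locations the artefact was inferred from


def review_queue(artefacts, threshold=REVIEW_THRESHOLD):
    """Return artefacts below the threshold, least confident first."""
    flagged = [a for a in artefacts if a.confidence < threshold]
    return sorted(flagged, key=lambda a: a.confidence)


docs = [
    Artefact("architecture-diagram", 3, 0.92, ("src/core",)),
    Artefact("inferred-requirements", 1, 0.55, ("src/billing",)),
    Artefact("data-flow", 2, 0.74, ("src/etl",)),
]

for artefact in review_queue(docs):
    print(f"{artefact.name} v{artefact.version}: confidence {artefact.confidence:.2f}")
```

Sorting by ascending confidence puts the most uncertain interpretations in front of analysts first, which is one simple way to spend limited expert time where knowledge gaps are largest.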

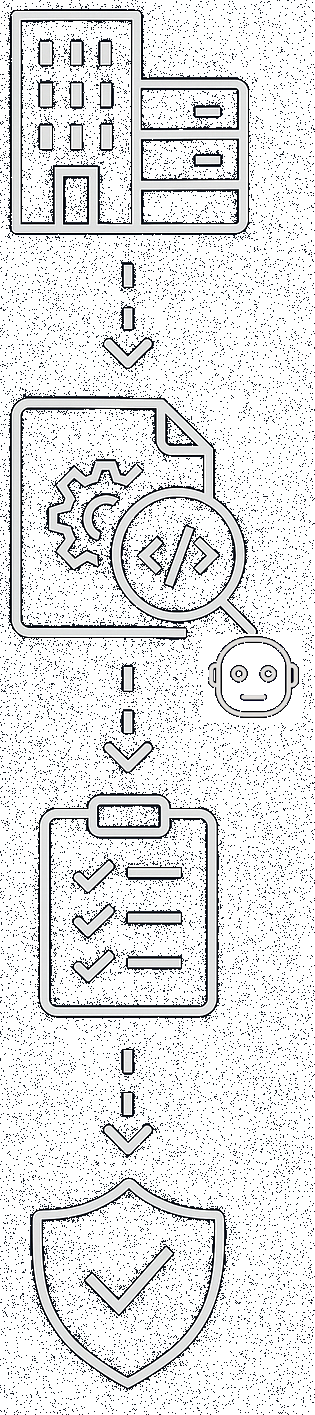

Agentic AI Software System Code Audits

- AI agents scan the entire codebase to identify code smells, anti-patterns, dead code, dependency vulnerabilities, and licensing conflicts.

- Agents model the actual runtime behaviour, checking for state inconsistencies, race conditions, unused endpoints, and performance bottlenecks.

- Results are summarised in human-readable reports, with risk scores and actionable refactoring suggestions prioritised by impact.

- Our security agents detect injection risks, data leaks, misconfigurations, API surface vulnerabilities, and access control flaws across the stack.

- Infrastructure-as-code and environment files are included, enabling infrastructure-layer audits (e.g., open ports, unencrypted traffic, key mismanagement).

- We're working towards mapping audit outputs to compliance standards (e.g., OWASP Top 10, NIST, ISO 27001) and including reproducible proofs-of-risk.

- AI agents evaluate documentation coverage, code ownership gaps, test coverage drift, and regulatory audit readiness.

- The project explores generating dashboards that offer a comprehensive view of system health, maintainability metrics, and legacy debt concentration zones.

- Suggested remediations include cost estimates, risk-reduction scores, and transformation timelines.
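The idea of risk scores "prioritised by impact" from the list above can be sketched as follows. This Python example assumes a simple likelihood times impact score on 1 to 5 scales, with estimated fix cost as a tie-breaker; the `Finding` fields and the scoring formula are hypothetical choices for illustration, not the scoring model used in the project.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    """Hypothetical audit finding with coarse risk inputs."""
    title: str
    likelihood: int       # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int           # assumed scale: 1 (minor) to 5 (severe)
    fix_cost_days: float  # rough remediation estimate

    @property
    def risk_score(self) -> int:
        # Classic risk-matrix product; real models are usually richer.
        return self.likelihood * self.impact


def prioritise(findings):
    """Highest risk first; among equal risks, the cheapest fix first."""
    return sorted(findings, key=lambda f: (-f.risk_score, f.fix_cost_days))


findings = [
    Finding("SQL injection in search endpoint", 4, 5, 3.0),
    Finding("Dead code in reporting module", 2, 1, 1.0),
    Finding("Database port open to the internet", 4, 5, 0.5),
]

for f in prioritise(findings):
    print(f"{f.risk_score:>2}  {f.title}  (~{f.fix_cost_days} days)")
```

Breaking ties on fix cost surfaces quick wins among equally risky items; a team could just as reasonably weight by business criticality instead.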

Frequently Asked Questions

If you don't find your answer here, please don't hesitate to contact us using the form below.

AI coding assistants improve productivity but rely heavily on the developer’s oversight and guidance.

Enterprise-grade code generation, on the other hand, involves structured, multi-agent AI systems that collaboratively plan, write, validate, and document code at scale.

These systems follow strict architectural guidelines, quality standards, and security protocols—designed for team-based software development with traceability, compliance, and production readiness in mind.

Large language models can sometimes simplify, over-engineer, or skip essential quality steps when given too much freedom. By guiding the AI in advance, we aim to ensure the output aligns with established architectural, quality, and maintainability standards.

That’s why we pair our AI agents with highly skilled human professionals who provide oversight, validation, and quality assurance at every step.

Contact Us

If you’d like to reach out, whether to ask a question, put forward a collaboration, suggest an improvement, or contribute a new entry to our FAQ, we’d love to hear from you.

Simply complete the form below and our team will get back to you as soon as possible.